Ipsative Assessment: Growing Students’ Knowledge, Skills and Self-Esteem

In the lead-up training for the Olympic Games, athletes and swimmers are guided primarily by measurements of their personal-best performances. While external measures are kept in mind, coaches who want the best from the athletes and swimmers they train are directed by personal performances, as defined by personal-best times. If this evaluative methodology is good enough to motivate improvement in potential Olympians, then one must ask why schools don’t prioritise it in their assessment and reporting programs.

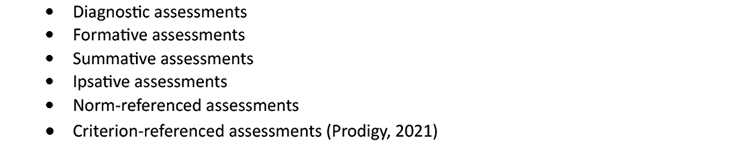

Assessing student performance is a key task for teachers, who use summative and formative assessments as central parts of that work. However, assessment is broader than Michael Scriven’s formative-summative dichotomy. Prodigy (2021) listed six types of “assessment as learning”, shown in Table 1.

Table 1 Six Types of Assessment as Learning

The term “ipsative assessment” has been borrowed from psychology, where Raymond Cattell used it in his 1944 article “Psychological measurement: normative, ipsative, interactive”. William Clemans (1966) explained ipsativity as follows: “Any score matrix is said to be ipsative when the sum of the scores obtained over the attributes measured for each respondent is constant” (p. 4). In explaining ipsative assessment in education, Prodigy (2021) made the point: “Ipsative assessments are one of the types of assessment as learning that compares previous results with a second try, motivating students to set goals and improve their skills.” This ipsative focus on comparison and improvement overlaps, to a degree, with the use of formative assessment. However, when managed properly, the ipsative process re-asserts the individual, longitudinal achievement of the personal-best over the less personalised normative comparisons that dominate classroom reporting.
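Clemans’s definition can be read as a simple check on a table of scores. The following is a minimal sketch, assuming scores are held as one row per respondent and one column per attribute; the function name `is_ipsative` and the sample data are hypothetical and are not drawn from Clemans’s monograph.

```python
from typing import Sequence


def is_ipsative(score_matrix: Sequence[Sequence[float]], tol: float = 1e-9) -> bool:
    """Return True when every respondent's scores sum to the same constant.

    Each row is one respondent; each column is one measured attribute.
    """
    row_sums = [sum(row) for row in score_matrix]
    # Compare every row sum with the first one, allowing for rounding error.
    return all(abs(total - row_sums[0]) <= tol for total in row_sums)


# Hypothetical data: each respondent shares out 10 points across three attributes.
forced_choice = [[5, 3, 2], [1, 4, 5], [10, 0, 0]]

# Hypothetical data: each attribute is scored independently, so row sums differ.
free_scores = [[5, 3, 2], [4, 4, 4]]

print(is_ipsative(forced_choice))
print(is_ipsative(free_scores))
```

In the first table every row sums to 10, so a respondent’s scores only describe the balance among that respondent’s own attributes; in the second the row sums differ, so the table does not meet the definition.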

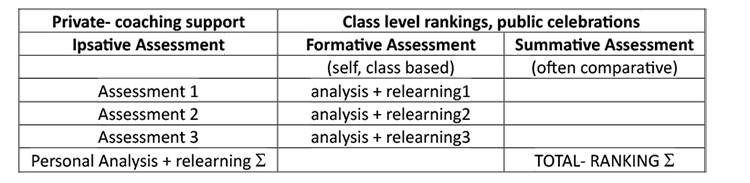

Table 2 Interface Between Ipsative, Formative and Summative Assessments

In Table 2, the ipsative data can generate both formative and summative responses to assessment results and, as such, serve an integrative purpose in classroom assessment within a teaching and learning context. Importantly, Gwyneth Hughes (2014, p. 72) reiterates that ipsative assessments are compared only against that student’s own earlier results: 'Put simply, an ipsative assessment is a comparison with a previous performance; it is a self-comparison. Phrases such as assessment against oneself, self-referential assessment and progress reports all capture the essence of ipsative assessment.'
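The self-comparison that Hughes describes can be illustrated with a few lines of code. This is a minimal sketch under stated assumptions: the function name `ipsative_feedback`, the returned fields and the sample scores are all hypothetical, and the only data consulted is the same student’s earlier results.

```python
from typing import Optional


def ipsative_feedback(previous_scores: list[float], new_score: float) -> dict:
    """Compare a new score only with the same student's earlier scores."""
    if not previous_scores:
        # A first attempt has nothing to be compared with; it sets the baseline.
        change: Optional[float] = None
        best_before = None
    else:
        change = new_score - previous_scores[-1]
        best_before = max(previous_scores)

    is_personal_best = best_before is None or new_score > best_before
    return {
        "change_from_last": change,
        "personal_best": is_personal_best,
        "best_so_far": new_score if is_personal_best else best_before,
    }


# Hypothetical scores for one student across four attempts at the same task.
feedback = ipsative_feedback([12, 15, 14], 16)
print(feedback)
```

No other student’s score and no external standard enters the calculation, which is the feature that separates this kind of report from a normative one.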

Assessment and Joy

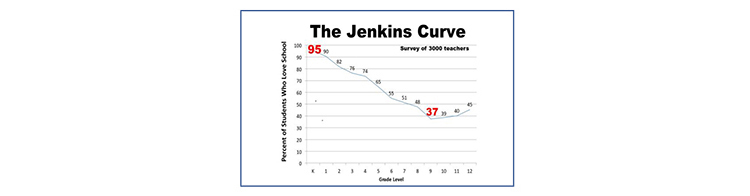

Throughout one’s life, there is a natural joy in learning, but this is often dampened in schools for a variety of reasons, including the reporting of students’ achievements in a comparative context. The highly respected Jenkins Curve (Jenkins, 2022, p. 10) shows that children start their education full of enthusiasm, but that enthusiasm fades badly as they progress. While there are many factors at play in this loss of student enthusiasm, a decline in intrinsic motivation is a major result.

Figure 1 The Jenkins Curve: Students’ Love of Schooling

Gwyneth Hughes impressively draws attention to a neglected aspect of assessment in a world dominated by the formative-summative dichotomy and normative testing. She warned:

‘However, there is a third, little documented method for referencing an assessment: self referencing or ipsative assessment. Ipsative assessment means that the self is the point of reference and not other people or external standards, and personal learning and individual progress replace the competitive and selective function of assessment’ (Hughes, 2014, pp. 71-72).

Hughes continued that defining ipsative assessment: ‘… means making a distinction between progress and achievement, exploring the differences between ipsative summative and ipsative formative assessment and explaining how ipsative assessment is longitudinal and requires a holistic curriculum design’ (p. 72).

This assessment paradigm fits the inclusive, growth-focussed model described by Lee Ann Jung.

Lee Ann Jung: Assessing Students, Not Standards

In her newly published (2024) book, Lee Ann Jung, a clinical professor at San Diego State University, reminded teachers that they are assessing students, not just standards, in their teaching practices, a principle that underwrites ipsative assessment. The author is an expert in the field of inclusive education, but to silo the knowledge and skills that she presents would be a failure to recognise that every strategy she provides can be employed in every teaching situation, with every student. The respectful, educational relationships between teacher and students also underwrite ipsative assessment.

At a time when national testing programs dictate what happens in schools and classrooms, this is a timely warning to rethink the purpose of school-based assessment. An important point that this author makes is that the understanding of formative assessment has “morphed”, and she observed: “Our mislabeling of assessment types as formative or summative isn’t accurate, and worse, sends the message that less formal evidence of learning doesn’t count” (p. 31). In this national testing context, only summative testing results are seen as valid, and the learning growth records of formative testing are discarded.

Exacerbating the issue of testing and public reporting is the reduction of a student’s achievement for the semester to one letter, or one number, which fails to reveal the depth of understanding and effort. An assessment method favoured by Jung is a conversation between teacher and student, and she listed six advantages, including: it is interactive; it is natural; it feels like sharing; it doesn’t feel like judgement; and there is not the same pressure as in normative testing (p. 131). Promoting mastery experiences results in feelings of success for the student, which is what Carol Dweck refers to as a growth mindset. The alternative, failure and the threat of failure, damages students’ sense of self-efficacy and motivation, and Lee Ann Jung notes that this “… calls into question the logic of threatening students with poor grades to motivate them to make an effort, doesn’t it?” (p. 126).

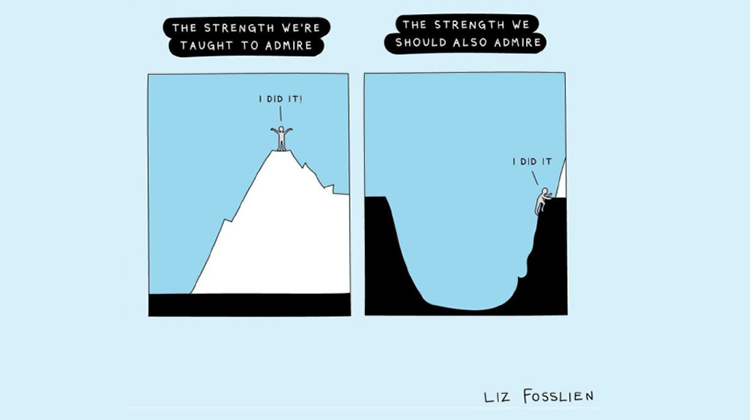

The illustration (main) by Liz Fosslien summarises Lee Ann Jung’s thoughts, pointing out that every student can achieve a learning personal-best, and that is something that should be celebrated and embedded in our educational planning.

The Accountability Dilemma in Schools

In reality, classroom teachers use ipsative assessments as part of their everyday teaching programs, but the normative/comparative measures of external accountability are ever lurking. For classroom teachers, encouraging and motivating learners to develop their personal-best in any learning task is the prime intent. However, when it comes time to write the students’ Semester Reports (Report Cards), there is an insistence that alphabetic ratings are moderated and norm-based. So, an ‘A’ in one reporting classroom is, with moderated oversight, supposed to be of the same standard as every other ‘A’ in the state, which is a practical impossibility. The point that we make is that the state and national-level comparability ratings delivered on students’ reports have a level of validity so low that it renders them misleading.

Second, assessment results are often made public knowledge, and in schools the ‘car park mafia’ soon have students in classrooms ranked, an irksome outing of personal test results. Lee Ann Jung noted: ‘Reporting with a focus on growth gives us the ability to be fully inclusive of every student’s learning, with the option to show specific strengths far beyond what a typical 4-point scale can capture …’ (p. 228).

Third, in terms of state-level school accountability, the only currency that is recognised is the normative results of national testing. This means that most schools adopt hybrid systems that present the normative assessment results alongside results from school-based initiatives such as happiness ratings and social-emotional learning measures.

Case Study: Elementary Math Mastery (EMM) and Ipsative Improvement

In her doctoral research, Rhonda Farkota (2003) examined the effect of a Direct Instruction program, Elementary Math Mastery (EMM), on students’ mathematical self-efficacy and achievement. Under the nomenclature of self-efficacy, Albert Bandura (1986) championed the role confidence plays in the learning process, claiming personal performance, being based on personal experience, was the most influential source of self-efficacy. He also highlighted self-efficacy as a powerful motivational force.

Within an ipsative context, the experimental treatment, EMM, required students to monitor their daily progress toward mastering academic goals. This helped to modify students' self-efficacy beliefs. As they achieved goals, they came to realise they were capable of performing the tasks, which boosted their confidence for future tasks. Continuous self-evaluation was a vital component of the EMM lessons. The questions gradually increased in difficulty, providing clear criteria for students to self-assess and monitor progress independently.

Generally speaking, students do not enjoy taking tests, so the EMM lessons were specifically designed as mastery lessons, not to be perceived as formal tests. Student responses, however, provided teachers with reliable daily diagnostic information similar to that acquired from a formal test situation. It was found that providing daily personal feedback to the students made them aware of their progress, strengthened self-efficacy, sustained motivation, and improved academic achievement.

The EMM program consists of 160 scripted lessons, each comprising 20 strands. For diagnostic purposes, lessons were structured in rounds of five. The first lesson of each round introduced a new concept, and the last lessons tested these concepts. The student workbook was a personal academic journal designed for data collection and analysis, crucial steps on the path to mastery. It was organised so that students would record their scores in a personal matrix. Each matrix included a round of five lessons and 20 sub-categories (strands) for the student to record, analyse, extract, and report from. Students’ personal data analysis included presenting visual representations such as cumulative frequency tables, comparative bar graphs, and pie charts.

The teacher's role in presenting the EMM program was to deliver the lesson, diagnose any issues, and debug. Students recorded and represented their data daily in the assigned workbook and reported bugs (a bug being an incorrect response where the student failed to understand their error). The student workbook provided for the students’ self-analysis, reflection on personal growth in knowledge, understanding, and achievement, and mapping of performance. Note that students here concentrated on personal growth rather than comparing themselves with other students. When assessing progress, students discussed with the teacher their strand of strength, the strand they most needed to improve, and how to increase their overall scores. Importantly, pinpointing and tracking student-identified bugs enabled the teacher to plan appropriate student support where required.
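The personal matrix described above can be sketched as a small table with one row per lesson in a round and one column per strand. This is an illustrative sketch only: it assumes each cell holds 1 for a correct response and 0 otherwise, which the EMM description does not specify; the function name `summarise_round` is hypothetical; and the example uses four strands rather than twenty to keep it short.

```python
def summarise_round(matrix: list[list[int]]) -> dict:
    """Summarise one round of lessons from a student's personal matrix.

    matrix[lesson][strand] is assumed to hold 1 for a correct response
    and 0 otherwise (an assumption made for this sketch).
    """
    n_strands = len(matrix[0])
    # Total per strand across the round: shows strengths and weaknesses.
    strand_totals = [sum(lesson[s] for lesson in matrix) for s in range(n_strands)]
    # Total per lesson: shows the student's own progress from day to day.
    lesson_totals = [sum(lesson) for lesson in matrix]
    return {
        "lesson_totals": lesson_totals,
        "strand_totals": strand_totals,
        # Strands are numbered from 1; ties go to the lowest-numbered strand.
        "strongest_strand": strand_totals.index(max(strand_totals)) + 1,
        "strand_to_improve": strand_totals.index(min(strand_totals)) + 1,
    }


# Hypothetical round: five lessons (rows) by four strands (columns).
round_scores = [
    [1, 0, 0, 0],
    [1, 0, 1, 0],
    [1, 0, 1, 1],
    [1, 1, 1, 1],
    [1, 1, 1, 1],
]

summary = summarise_round(round_scores)
print(summary)
```

For this sample round the lesson totals rise from 1 to 4, strand 1 is the strand of strength and strand 2 is the one most in need of improvement, which are the points a student would raise with the teacher. Every figure comes from the student’s own record; no class average appears anywhere.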

As students engaged in the EMM program, they learned which actions produced positive growth results and were thus provided with a guide for future lessons. It was also found that the anticipation of desirable results motivated students to persevere. These findings are consistent with the research literature, which shows that ipsative assessment, goal-setting and self-efficacy are important factors in academic achievement. By providing specific, challenging, yet attainable short-term goals, the EMM program effectively enhanced students’ self-efficacy. Short-term goals provided clear standards against which students could measure progress, and the mere perception of progress strengthened their self-efficacy, motivating them to continually improve.

This case study, coupling structured Direct Instruction (DI) teaching with student-directed learning through personal data collection and analysis within an ipsative context, provides solid support for the proposition that ipsative assessment is a powerful learning adjunct and a strong motivational force.

*Elementary Math Mastery (EMM) https://mathmasteryseries.com.au

Discussion

It is unfortunate that the people who were successful in education, and who now determine what education should look like, see education as a public ranking device with a Hunger Games quality. The majority of students find the unnecessary public ranking of students in classrooms and schools demeaning, which adds weight to what the Jenkins Curve is telling us.

What makes ipsative assessment different and useful is that it is personal and not shared beyond the tester and testee, which comes from the confidentiality underwriting the term’s psychological provenance. In classrooms the ipsative results are often related to personal best efforts and mastery, and that marks this assessment as different from formative assessment. Classroom rankings and classroom targets displayed on pinup boards do not fit the ipsative assessment philosophy.

What we like about Lee Ann Jung’s assertive statement is that the caring and respectful relationships between teachers and students should be a part of every school-based relationship, which then facilitates stronger educational growth.

Liz Fosslien’s illustration encapsulates the celebratory dilemma that teachers and schools often face, and it comes back to a sense of balance. However, the structures and processes of reporting personal-best type improvements need a lot of promotion and development at all levels of education if they are to be seen as important as “Dux of the School or Class” celebrations.

It is clear that most schools operate in a hybrid assessment environment. The challenge that ipsative assessment poses for teachers and schools is how to manipulate the hybrid model to foster learning joy and the mastery of knowledge, skills and beliefs that are essential for a strong, creative life for students.

We have written this paper because ipsative assessment adds an important dimension to the way teachers and schools think about assessment: it builds student knowledge in a safe, encouraging manner and develops students’ joy of learning. In the post-COVID era, we need to look at new ways to re-engage students in schooling, and ipsative assessment is one small step in making schools great for all students.

References

Adams, G. L., & Engelmann, S. (1996). Research on direct instruction: 25 years beyond DISTAR. Seattle, WA: Educational Achievement Systems.

Bandura, A. (1986). Social foundations of thought and action: A social cognitive theory. Englewood Cliffs, NJ: Prentice-Hall.

Cattell, R. B. (1944). Psychological measurement: Normative, ipsative, interactive. Psychological Review, 51(5), 292-303.

Clemans, W. V. (1966). An analytical and empirical examination of some properties of ipsative measures. Psychometric Monograph, 14. Psychometric Society. http://www.psychometrika.org/journal/online/MN14.pdf

Farkota, R. M. (2003). Effects of direct instruction on self-efficacy and achievement. Monash University. Thesis. https://doi.org/10.4225/03/586eef219232

Hughes, G. (2014). Ipsative assessment: Motivation through marking progress. Springer.

Jenkins, L. L. (2022). How to create a perfect school (2nd ed.). Scottsdale, AZ: LtoJ Press.

Jung, L. A. (2024). Assessing students, not standards: Begin with what matters most. Corwin.

Prodigy (2021, September). 6 types of assessment (and how to use them). Prodigy. https://www.prodigygame.com/main-en/blog/types-of-assessment/

Schunk, D. H. (1982). Effects of effort attributional feedback on children's perceived self-efficacy and achievement. Journal of Educational Psychology, 74, 548-566.

Permission to publish the Liz Fosslien illustration was given by Liz Fosslien on 27 June 2024.