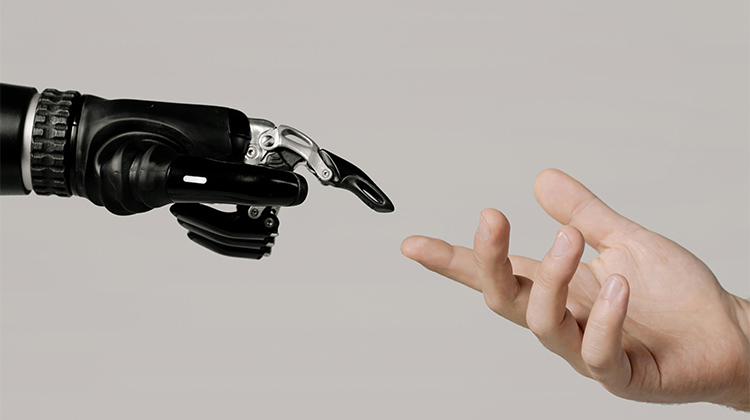

The Ethical ‘Robot’

Our future AI masters have us both fascinated and terrified at the same time. The accelerating rise and influence of Artificial Intelligence has spurred an emotional explosion among educators and society in general. The rainbow of these emotions ranges across denial, resignation, consternation, fear, anger, disbelief, enthusiasm, accommodation, excitement, anticipation and outright revulsion. As a semi-retired principal/educator now consulting and advising a large number of teachers and educational leaders, I have cause to reflect on this wide disparity and mixed perception of what is happening now and what is to come.

There should be no doubt that the current generation of Gen AI applications provides useful time-saving tools for educators. These tools are embraced consistently by new wave generation teachers, while more slowly permeating through the ranks of experienced teachers. Practical professional learning provides a convincing experience for the uninitiated in how well many of the more repetitive design and administrative tasks can be ‘short-cut’ with a simple application of ChatGPT or Copilot or any of the more specifically tailored AI tools. I have seen genuine sceptics move to full embrace after the most rudimentary of demonstrations.

At the same time a significant ‘rump’ of concern underlies both the users and the holdouts, focused on the undeniable persistence of some student use or abuse of Gen AI as a work-around to their own thought and creativity. Student submitted work may be wholly their own, or (increasingly acceptable) AI influenced, or actually wholly AI generated.

Suspicions abound. This is particularly evident when a previously unskilled student with access to higher-end proprietary apps submits a magnificent assessment task worthy of post-graduate recognition. The assessor’s uncertainty morphs immediately into strategies to seek out, discount and shame the ‘plagiarist’. The ‘arms race’ between unscrupulous creators and detection tools is also in full flight. The age-old war against intellectual theft has risen to a new and scary dimension among the classrooms of the world. In the digital world on which some up-to-speed teachers rely, detection of AI generation is running alongside in the race, though always just a few steps behind.

So the practical and ethical concerns are real, while at the same time, the advantages of well-understood and ethical application of Gen AI are increasingly apparent. This holds even though, ultimately, the less ethical use of it will also permeate into a segment of the ranks of human educators.

While all of these considerations are foremost in the minds of my colleagues in education, they are but the outermost layer of a much deeper-skinned onion of angst which permeates all levels of society. This concern is built on the backs of uncertainty about both the future of individual careers and the future of human rights, values and even existence. It is founded in sci-fi origins, epitomised by the Terminator and Matrix canons of film and nurtured by the stereotyped media images of Musk and tech moguls usurping increasing access and power. These saplings of scepticism burst upwards for each individual whenever AI makes a new leap forward, makes a mistake (e.g. an AI-driven self-driving automobile crashes into a road sign) or reveals the depth of its awareness of our confidential information. How long till we all lose our jobs, have no privacy at all, and become increasingly worthless and sidelined by high-functioning AI bots?

I had a small existential moment of experience a couple of decades ago when I played as a B grade player for a local chess team and club with numerous tournaments. At the time chess computers were in their infancy and one member regularly brought various grades and models of them into the club to participate in tournaments. Our best club players’ ratings were just short of expert or national master level, and for more than a year, the computer results languished in the middle of the pack. Then one quiet Monday evening the latest version of the device beat our top player in a fair competition game. There was silence and contemplation. Was this the end of our sport as we knew it?

Some years later, in 1997, of course, the much more sophisticated program Deep Blue won against Grandmaster and world champion Garry Kasparov in a six-game match, and the whole chess world shuddered under the blow. But…human chess did survive, and AI chess bots are now well integrated into supporting and advancing human chess play. Arnie’s Terminator is nowhere to be seen except on the rarest occasions when some clever and deceitful human finds a way to circumvent the accepted safeguards and use the chess programs to cheat in real-time play.

Optimists among us routinely reference similar historical precedents of human technological developments to calm our fears. The very origins of the now pejorative term Luddite lie in concerns about the arrival of mechanical looms in the textile industry. Some workers, of course, suffered, but the world of work survived and labour conditions improved. From there the steam engine, the industrial revolution, the nuclear era, and the digital era have all transformed our lives for better and worse, but we managed to adjust to the technology mostly to our benefit. In my life the internet was going to make us all smarter and wiser by giving everyone access to all the knowledge of civilization. It may have done just the opposite. Spellcheck was going to wipe out our capacity to spell (perhaps a bit, but not disastrously so), and emails would wipe out postal and delivery services. Amazon might disagree. Zooming and Facetime would diminish human connection. I still attend parties and conferences in person, but now have access to many more, and work-from-home is opening new opportunities for many.

Still, one can’t help but feel apprehensive that an increasingly omniscient and potentially omnipotent AI presence might be just a bit more threatening than any of these, given that none of the other technologies have challenged our human role as the apex predator on the planet. AI may have that potential already, given our existential dependence on digital systems to thrive, communicate and even survive. The only missing links in this current threat are INTENT or motivation by AI to do us harm and CONNECTIVITY, in that AI systems are still scattered and disparate without the present universality of Terminator’s Skynet or the AI masters of the Matrix.

With the evolution toward universal ubiquitous connectivity proceeding apace and unlikely to slow, it is possible to confidently predict that a worldwide AI entity may not be too far away. If this is, indeed, an unstoppable force, our last firewall against AI dominance must lie in INTENT. And intent must be founded in some form of AI values and ethics. Literally, if AI does evolve to sentience, what would its motivations be to do us harm or good? Then how would this apply to societal well-being and/or individual well-being? In practice, what choice would an AI-controlled car make if it had to choose between crashing into an old woman pushing a pram and a group of 9 middle-aged business persons? Or, if another tribe launches a nuke at my tribe, do the AI segments of the decision cycle choose to launch back or to preserve the species over my tribe? (WarGames, the film, is now over 40 years old. Mutual Assured Destruction, more than sixty years old.) At the moment, of course, these choices are struggled over and guided by the human AI programmers. This may not always be so.

Therefore, it is well worth examining any current or future efforts to introduce/embed some foundation of ethical understanding across any sophisticated AI platforms. Yes, of course, this makes enormous sense. Yeah…well, no. The very idea that, as a decisive strategy, our civilization can agree on a universal set of values and ethics is a ludicrous assumption. As individuals, yes. I believe that I live by an ethical code. I live in a society with similar ethical principles. And we may naively believe that, given some consultation, we could as a world society arrive at some minimal agreement.

We have certainly tried to do just this many times, in forms familiar to most educated individuals: the ‘Golden Rule’, many Greek philosophers, the Rig Veda in Hinduism, the Ten Commandments, Magna Carta, the American Bill of Rights, the UN Declaration of Human Rights, even, topically and minimally, Asimov’s Three Laws of Robotics, and so many more religious and humanistic undertakings. Unfortunately, commonality in each of these is outnumbered by disparity. And then, worse still, the very vagueness of so many of the principles of each allows those of us as sentient organisms with any power to contort and massage them to our own individual ends and motivations. All those clear black and white lines in the sand blur into muddy grey when key decisions intrude. Examples are so numerous that volumes of legal jurisprudence fill the shelves in every country.

But, just for fun, a few:

The Ten Commandments’ admonition against adultery has hardly survived very well in practice among even the strongest Christian communities, and the sexual revolution of the 1960s has torn the foundations out from under that precept well and truly.

The American Bill of Rights sounded good at the time. But gun rights and free speech advocates and the right to life debates have ripped that one asunder as well.

The 30 Articles of the Declaration of Human Rights sound great, but come undone with Article 1, which states ‘All human beings are born free and equal in dignity and rights. They are endowed with reason and conscience and should act towards one another in a spirit of brotherhood’. Clearly, in economic practice, not everyone is born free and equal, and the ‘reason’ and ‘conscience’ of many vary widely. And ‘brotherhood’ is twisted into all sorts of shapes in family, social and nationalistic contexts. The same foibles can be attributed to at least half of the remaining principles.

Even the commonsense simplicity of Asimov’s Robotic ‘code of ethics’ is frequently explored in films where the AI entity has to choose which humans to protect even when it may mean harming others.

A fundamental underlying fissure in ethics seems to manifest in two different dimensions. The first of these gaps occurs between faith-based beliefs which are founded on some specific ‘enlightenment’ or text from the past and focus forward to an afterlife reward for leading an ethical life according to that text in the current life. This hard-text set of rules is by its definition immutable. A god, theoretically, doesn’t change her/his mind about behaviour. In this case the natural evolution of human behaviour over time is inconsequential. Right is right and will always be right regardless of human or social evolution. The ethics and values of AI should then mirror these precepts according to religious doctrine. WHICH of the 3000 extant religions in the world today should guide this AI learning is only the first step toward confusion. The annoying fact that even among the practitioners of any one of these religions there is a massive range of acceptable human behaviour is another conundrum.

The counterpoint to these faith-based values is a more readily agreed set of humanistic guidelines which might be based on a clearer understanding of human psychology or scientific grasp of human behaviour which could be applied universally to the species for the perpetuation of the species and individuals within it. But, even here, the humanistic values have evolved over time as the context of the environment has changed. The sexual mores/values required of a society struggling to survive with high infant mortality, primitive medical practice, few resources and little understanding of human anatomy are much different from those of a technologically rich but overpopulated environment. Or, as another example, how do humanistic values resolve the need to value your own family, tribe, community or nation over an outsider’s one if we accept that all ‘human beings are equal’?

So, to the point. If we are (ever) able to teach AI a set of values to ensure our own well-being, what will that ethical code be? Who will determine it? Rest assured that if it comes from Elon Musk, or even Mother Teresa, or LeBron James, or Keanu Reeves (maybe my choice), or a middle-class businessman, or a plumber, or a Chinese autocrat, or an Indian guru, or you, it will not be the pure ethics that any one of the others will want to put forth.

And, of course, if we do ever get to teach an omnipotent AI a set of values, it will not be the result of a thoughtful collaborative world-wide congress, but (sadly?) those of the technocrat/s responsible for the ‘coding’ and input of the initial pre-sentient entity itself. ‘God created man in his own image.’

Not much joy in all this perhaps. The trend toward sophisticated AI systems connecting and amalgamating their operability continues apace; it may reach Skynet proportions. It has an inevitability about it on current trends. And any protection to be derived from ensuring that the great AI beast behaves ethically seems equally destined to confusion and doubt if it in any way mirrors the behaviour of its origin species (I really don’t like watching the news much anymore).

I find consolation in only two frames of potential optimism. The first of those I have already referenced: as a species we have, so far, endured a multitude of technological revolutions, and, despite the abject fears each time, there is no previous era of history in which most of us would choose to live.

The second is that an omniscient AI entity is already closer to reality than an omnipotent one. Current platforms, if turned loose to their own devices, can suck up in microseconds all of the digital knowledge of civilization now. While its algorithms funnel us down our own self-serving rabbit holes of knowledge, maybe it will be smart enough to look down all of them and recognize the follies of our human history (hypocritically, when I write prompts, I tell it where to look). If we can keep our grubby hands off it while it builds the digital neural pathways to control us completely, just maybe it will be smarter than all of us and, contrary to our history and individual foibles, decide that peaceful coexistence is worthwhile after all.

In the meantime, while I do and will continue to teach teachers to use Generative AI models in an ‘ethical’ and constructive way, I did not use any of them to construct, edit or modify this article!

Image by Cottonbro studio